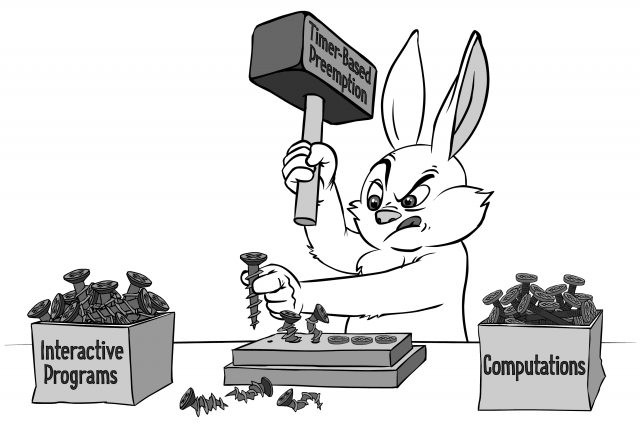

it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.”

— Abraham Maslow, The Psychology of Science, 1966 —

Continued from Part I. Changes in IT Over Last 50 Years and Part II. Desirable Improvements

Now, as we discussed both “what we have got in IT since times of Multics” and “which improvements we would like to get from an OS”, we can try to stitch them together. Of course, there is a myriad of ways how to do it, so I have to resort to The Dark Art of Engineering(tm) to draw one particular picture; moreover, I’m entering a minefield of thinking aloud – where nothing is carved in stone and everything can be questioned. As it was noted in Part I, please do NOT hesitate to articulate your concerns about the proposed design (well, still only ideas in this Part III) – but OTOH, please make sure to be specific with your criticism and try to refrain from non-arguments such as “hey, it is different from what is done for 50 years so it must be wrong”.

Basic Idea #1. First-Class Event-Driven Programming – Now For Phones/Desktops/Servers Too!

You knew this was coming, Pete

— Harry Osborn from Spiderman 3 —

If you are familiar with my previous writings, I am sure you knew this was coming <wink />.

As it was discussed in Part I, there are lots and lots of Interactive Programs out there, with these programs doing nothing but processing events. On the other hand, deep at the low level, all modern CPUs are interrupt-driven, and interrupts are just one type of events.

Still, most of existing operating systems will process an incoming interrupt – and will pass the data from the interrupt to one of heavy-weight indefinitely-running preemptable-by-timer Linux-/Windows-like threads. Then the processing will be further passed to more heavy-weight threads until it reaches an app-level thread; the app-level thread will run an event loop, with event loop waiting for something like select()/poll()/epoll()/kevent()/WaitForMultipleObjects()/etc. – and calling app-level event handlers. In other words, what happens in such a very popular scenario is that we have events at the low level, and event handlers at the app level, but for some reason1, we’re introducing a concept of indefinitely-running preemptable-by-timer heavy-weight thread in between those events and event handlers. Not only such heavy-weight threads should be cut away by the Occam’s Razor, but also they cause unnecessary thread context switches (and potentially data passing between different cores), which is known to be Darn Expensive(tm)2

What I’d clearly like to see in the OS, is <heresy>an ability to run purely event-driven programs without any long-running preemptable-by-timer threads at all</heresy>.

Being a bit more specific (and also taking into account that Shared-Nothing is a Good Thing(tm)), I would clearly like to see an option to run my purely event-driven app in the following manner:

-

everything in the system is a Finite State Machine (FSM), which has its own state and processes incoming events.

- All communications between FSMs are done via messages; there is no such thing as memory shared between FSMs3

- As there is no such thing as shared memory -> it means that there is no need to any kind of inter-thread synchronization4

- How state transition functions of our FSMs are implemented, doesn’t matter much from our current perspective. In particular, (a) a table-driven FSM (with state transition function represented as a table), (b) an ad hoc FSM (with state transition function directly coded in an imperative/OO/… programming language, modifying current state on the fly), (c) a hierarchical state machine (HSM) (see, for example, [Samek]), and (d) a functional FSM with state transition function expressed in a functional programming language (taking old_state and event as inputs, and returning new_state) – all (a)-(d) are perfectly fine for our purposes.5

- All communications between FSMs are done via messages; there is no such thing as memory shared between FSMs3

- event handlers of FSMs are completed pretty fast. This is just by the very definition of our program being Interactive: even with modern web sites, if we process a request longer than for 1 second, we usually end up in quite a trouble.6 This is a far cry from my days on a VM/370 box when we submitted a program and got our results the next day – if we were lucky, that is.

- if blocking is necessary – event handler effectively sends a request to that would-be-blocking operation and terminates, staying essentially non-blocking (using some kind of callback to process return – ranging from a simple waiting for another event all the way to await-based non-blocking code, for more discussion see [NoBugs])

- event handlers are allowed to Run-to-Completion at least unless an event handler of a higher-priority FSM comes in

- there is no timer-based pre-emption for event handlers. This raises a question of “how to protect our system from run-away handlers” – but as we’ll see in Part IV, answering it doesn’t necessarily mean going into timer-based pre-emption.

- Timer-based pre-emption may be still necessary (especially if long-running background tasks are required by our app), but they’re inherently optional.

What is supposedly a relatively new thought which I am trying to convey here, is that

- while the main practical reason to use such an approach on MCUs7 is not applicable to high-end CPUs,

- still, for high-end CPUs there is a different reason which pushes us towards the same approach with event handling being separated from universal-hammer heavy-weight threads: it is avoiding the cost of inter-thread context switch (which tends to be ultra-high exactly for high-end CPUs). And if we always run our short-living event handlers upon completion – we’re keeping the number of thread context switches (and even more importantly, the number of cache re-populations and data transfers between cores) to the absolute minimum possible.

- in addition, giving up on long-running threads allows to avoid thread sync, which in turn enables such things as determinism (more on it below) and scalable and highly-efficient Shared-Nothing architectures. This stands both for MCUs and higher-end CPUs.

- And of course, Occam’s Razor still applies to both MCUs and high-end CPUs.

Bottom line:

- having event-driven processing as first-class citizens of the OS is already becoming more and more popular in the embedded world and RTOS’s (mostly MCU-based stuff)

- however, it will also benefit phone/desktop/server OS’s intended for high-end CPUs

- from the point of view of our Desirable Improvements from Part II, our Idea #1 enables Improved Stability (as there is no horribly error-prone thread sync), and Improved Performance (if you’re still in doubt, there is a reason why non-blocking nginx outperforms Apache); in addition, it enables Determinism (see below) – which in turn is necessary to achieve quite a few of the other Desirable Improvements.

BTW, wherever desirable, we still can implement Shared-Memory access and thread-sync primitives such as mutexes within our FSMs; it is just that all mutex-related calls (just as all the calls in our FSMs), have to be non-blocking – which is perfectly implementable. Still, Shared-Memory prevents from obtaining several very important properties (from Determinism with all its goodies to safety guarantees), so we’ll keep all the Shared-Memory-related stuff in a separate API group (more on API groups below), and will actively discourage its use beyond Legacy apps.

1 mostly because we have a hammer named “pre-emptable thread” so anything else looks as a nail

2 as it was discussed in Part I, thread context switch may cost up to a million CPU cycles, which is a Damn Lot(tm)

3 in practice, there will be certain scenarios which do require memory sharing; however, most of them can be structured and provide guarantees without going into app-level thread sync; examples of such techniques include Reactors-with-Extractors [DDMoGv2], RCU, or Midori-style static analysis [Duffy]. Still, the vast majority of programs will follow a strict Shared-Nothing model – and will be able to benefit from its goodies

4 beyond what is necessary to implement queues, but they’re completely hidden from the app level

5 for (d) to be anywhere efficient over large states, a special compiler will probably have to be written to reduce copying, but fortunately, this is not our problem now

6 long-running calculations do happen – but usually not within the realm of the Interactive Programs

7 which is mostly “lack of memory space to handle multiple stacks on a limited-RAM non-MMU MCU”

Basic Idea #2. 2+2==4, On Each and Every Program Run. Determinism.

It is quite funny that while all the instructions of our CPUs are deterministic8 – our programs are not. This causes lots of trouble, including, but not limited to, lack of testability (sic! for details, see [Fowler]); in addition, lots of determinism-related goodies become possible as soon as we have deterministic behavior of our programs.

However, for our wannabe-OS-design, as we already said that all we have is FSMs and nothing but FSMs – well, it is feasible to make them deterministic;9 moreover, our FSMs can (and I say should) be made deterministic in a perfectly practical sense:

If admin of the system checks ‘recording’ checkbox for an app-level (or driver-level) FSM in our OS, the OS starts to log all the events – and all the values returned from system calls – for this particular FSM. Then, this log can be replayed on any machine,10 producing exactly the same results as it happened during the original run

How to implement it is a fairly long story (see [Hare] for a long discussion on it, including Call Wrapping and Circular Buffering with associated Serialization), the most important thing is that it can be done; moreover, for many practical cases recording will be fast enough to be enabled in production.11

And as soon as we have our FSM deterministic in the sense mentioned above, not only it means that it became testable (which is a Big Thing(tm) per se) – but also it enables the following goodies:

- Post-mortem Debugging. With logging/replay, we can take the log from the production after the crash has happened – and then replay it to our heart’s content on a developer box in our development lab. The Big Fat Hairy Idea(tm) here is that we will get not only the state when the program crashed (which is usually FUBAR) but the sequence of events over last N minutes of the program work – the events which likely led to the crash. This alone is such a Big Thing(tm) for fixing production bugs, that its importance cannot possibly be overestimated.

- BTW, Post-mortem Debugging can be used not only after crash/assert; for example, in [Aldridge] the log was taken at the point when the user pressed the button “the game is lagging right now”. It allowed them to improve the user experience while reducing game traffic by 80% (sic!).

- Replay-based Regression Testing. The idea is to run log from production over the new version of the code, and see if the new version still functions as expected. In practice, it is a tricky one and is not a universal silver bullet – but can be used most of the time to find weird regressions in unexpected places; in practice, it happens to be especially valuable in case of serious “pure refactoring”, but apparently, has its uses in usual development too. For details, see [DDMoGv2].

- One related thing is testing for Cross-Platform Equivalence (running events from one platform on another one).

- Fault Tolerance. As long as we run our FSM on one box, and can store the log on another box – bingo! we got fault tolerance for free (and as a side benefit, it is of low-latency kind, like virtual lockstep and unlike currently-used-by-VMs such as VMWare and Xen checkpoint-based fault tolerance mechanisms).

- Low-Latency Node Migration. As soon as we can Serialize state of our FSM, it can be migrated to a different box very easily; however, as a side benefit of being deterministic, deterministic FSMs can be migrated in a low-latency manner.

- Other (admittedly rather esoteric) stuff such as incremental backup via logging events, and ability to re-run the same inputs over the fixed code to see the difference in the outcome.

As we can see, Determinism enables lots of Desirable Improvements from Part II, in particular Post-Mortem Debugging, Fault Tolerance, and Node Migration (which is a prerequisite for Load Balancing and Scalability); also, additional means of testing will certainly help to improve app/driver Stability. Overall, IMNSHO benefits of being deterministic are so large that they alone would justify switching to Shared-Nothing deterministic FSMs.

8 that is, if we forget about timings, inter-thread races, and RNG instructions

9 note that this feasibility relies on FSMs being Shared-Nothing; making Shared-Memory mutex-based design deterministic, while theoretically possible, most of the time is not feasible

10 as long as it runs exactly the same executable as was run originally

11 of course, there will be a performance hit for logging, but if we can limit the hit to something like 30% rather than to 30x, it will be good enough for lots and lots of practical cases

Basic Idea #3. OS != kernel, OS == API. Same Apps/Drivers, Different Kernels

Traditionally, “OS” is thought in terms of its kernel. However, it is more or less common knowledge that the same kernel cannot efficiently cover all the use cases ranging from $1 MCUs to high-end server-side CPUs: we’ll have a full-scale comparison at the end of Part IV, but for now it will suffice to say that for $1 MCUs a Linux/Windows-style timer-based pre-emptive kernel is known to be way too heavy, and for client-side devices on high-end CPUs, which tend to run tons of highly-buggy apps, timer-based pre-emption is a serious requirement, so non-preemptive MCU-style kernel won’t really do.

This means that if we firmly fix a set of our APIs, but at the same time we allow very different kernels to implement these APIs, we can enable really really good coverage for a really wide spectrum of devices. This approach is known to work well in the embedded industry ([Samek], see also later-added [QXK]), but there are no reasons why it cannot be extended onto larger CPUs too.

Let’s note that wherever necessary, legacy inter-thread synchronization primitives (such as mutexes/semaphores) can be implemented on top of any of the kernels (even a perfectly non-preemptive Run-to-Completion kernel can implement mutexes),

This approach is instrumental for achieving our Desirable Improvement of having the same app/driver code for all the MCU/CPU range from $1 MCU to heavy-weight dozens-of-cores CPUs.

Basic Idea #4. Less Is More. Explicitly-Specified API Groups Enable APIs which are BOTH Bare-Necessities AND High-Level.

Look for the bare necessities

The simple bare necessities

Forget about your worries and your strife

I mean the bare necessities

Old Mother Nature’s recipes

That brings the bare necessities of life

— The Jungle Book —

The next basic idea is related to the question of APIs provided by OS to apps/drivers. In this regard, I’ll be facing a kinda dilemma.

On the one hand, I really really disagree that functionality such as parsing BMP file belongs to an OS. Badly bloated APIs (such as those in Windows) may be considered good by MS management, but with all due respect to vendors having to make some money, Vendor Lock-In is clearly a Bad Thing(tm) for a multitude of reasons.12

As a result, I’ll be looking for an extremely limited set of APIs – in essence, we’re speaking about our APIs being Bare Necessities APIs.

As a result, I firmly believe that APIs should be (a) minimalistic and (b) high-level. On the first glance, it causes quite a bit of conflict; for example, should we have read_config() stuff within OS or not? If yes, we’d deviate from being minimalistic, and if no, we’d deviate from providing high-level APIs.

My gut feeling tells me that it should be solved by having

well-defined groups of APIs, with app writer being responsible for explicitly specifying which groups it wants to use

For example, for the server side, there can be API groups ‘read config’, ‘log’, ‘TCP sockets’, ‘HTTP socket’, ‘MySQL socket’14, and ‘direct file access’, and it should be my responsibility as an app writer to say explicitly ‘hey, I want to use config, log, and HTTP and MySQL sockets, but I don’t want direct file access and bare TCP sockets’. This will facilitate the following:

- we have APIs which are both minimalistic and high-level. Sure, it is up to app writer to make its APIs really minimalistic (by giving up direct use of not-really-necessary stuff), but at least it becomes possible.

- BTW, having our APIs minimalistic allows supporting them at different levels – for example, nothing should prevent kernel driver from using logging API, or file access API (well, as long as it doesn’t create circular dependencies).

- those apps which are not using unnecessary APIs, get the benefit of being able to be run on a wider spectrum of systems (and/or more efficiently – see discussion above re specialized file systems and/or diskless servers).

- we can enforce using group restrictions both in compile-time (more on integrating compilers in Basic Idea #4) and using run-time control (similar to that of existing OS’s)

- for those apps which are avoiding unnecessary API groups, enforcing security becomes a cinch. No more SELinux-like stuff-which-we-usually-have-to-learn-in-runtime along the lines of “hey, this app can write to this specific dir” (where it actually writes logs) and “it also can read another dir” (which actually contains config); rather, it is “hey, this app can access its own config and can write its own logs”, which should be specified in the executable itself as a simple declaration, and is also much simpler and much less error-prone. As a side bonus, protocol-level detectors/IDLs become much more feasible too (as OS already has information that “this socket, not using port 80 or anything, is still supposed to use HTTP, and nothing but HTTP”, “this other socket is using MySQL and nothing but”, and “the app is not allowed to use APIs which create bare UDP/TCP/raw sockets at all”).

From the point of view of our Desirable Improvements from Part II, this Basic Idea #4 enables Flexibility and Same Code over MCUs/CPUs, and additionally facilitates Improved Performance (as it enables transparent-to-app specialized deployments mentioned above).

12 at the very least, Vendor Lock-In is bad for everybody besides vendor, but I’d argue that in the long run, it is detrimental even for vendor itself

13 while diskless servers are possible under existing OS’s, most of the time they create RAMdisk, and then use memory mapping over this RAMdisk, with logs picked up by some background process – which works, but causes a significant waste of resources on the way

14 probably via some kind of OS plugin/driver

Basic Idea #5. Being Gotcha-ed in Compile-Time. Integrating Compiler/Static Analysis

I’ve been gotcha-ed

— Garfield The Cat —

- for some boxes, we can say that all the apps should come in source form (not a problem at least for open source), and we will compile them with our own trusted compiler, which will try to eliminate certain classes of attacks and/or check for proof of non-existent memory corruption etc. etc..

- we can say that there is a trusted box with a trusted compiler somewhere else, and this compiler will sign compiled executables, and which signature we can check via PKI before allowing to run an app.

- for any of the scenarios above, we can either combine static checks with existing OS-level protections (to improve security), or to use them as a pre-requisite to disable certain OS-level protections (for example, by moving certain app within Ring 0) – to improve performance.

- in addition, we can task compiler with things such as support for split stack frames (of splittable-on-interrupt variety); more on it in upcoming Part IV

Mapping Static Analysis onto our Desirable Improvements from Part II, we can say that integrating/enforcing static checks will help to Improve Security and/or Performance, and will allow improving app/driver Stability too.

In a sense, this approach is somewhat similar to experimental systems such as [JX] and [Midori]; however, previously all such efforts-I-know-about, were based on enforcing one single managed programming language across the whole OS – which (a) won’t realistically work for lower-end MCUs – which violates one of our Desirable Improvements, (b) there will be problems making GC work for real-world RTOS,15 (c) creates an Absolute Dependency a.k.a. Vendor Lock-In on one single programming language16, and most importantly (d) never flew in practice.

15 Well, in theory you can have a deterministic GC such as Metronome, but it has its own limitations – and is not exactly used on an anywhere large scale

16 and most of the time there is a Huge Company behind this language fighting to make it The Only Language In Existence(tm) <sad-face />

To Be Continued…

References

[Duffy] Joe Duffy, “15 Years of Concurrency”

[DDMoGv2] ‘No Bugs’ Hare, “Design and Development of Multiplayer Online Games, vol. 2”, 2019

[NoBugs] ‘No Bugs’ Hare, “Eight Ways to Handle Non-Blocking Returns in Message-Passing Programs”, CPPCON2017

[TanenbaumBos] Andrew S. Tanenbaum, Herbert Bos, “Modern Operating Systems, 4th Edition”

, Pearson, 2015

, Pearson, 2015[QXK] “Preemptive 'Dual-Mode' QXK Kernel”

[Midori] “Midori”, Wikipedia

[Fowler] Martin Fowler, “Eradicating Non-Determinism in Tests”

[Hare] 'No Bugs' Hare, “Deterministic Components for Interactive Distributed Systems”, ACCU2017

[Aldridge] David Aldridge, “I Shot You First: Networking the Gameplay of HALO: REACH”, GDC2011

[memory-safe-cpp] “memory-safe-cpp”

[Samek] Miro Samek, “Practical UML Statecharts in C/C++ 2nd Edition”

[JX] “JX”, Wikipedia

Acknowledgement

Cartoons by Sergey Gordeev

from Gordeev Animation Graphics, Prague.

from Gordeev Animation Graphics, Prague.P.S.

Don't like this post? Criticize↯

P.P.S.

We've tried to optimize our feed for viewing in your RSS viewer. However, our pages are quite complicated, so if you see any glitches when viewing this page in your RSS viewer, please refer to our original page.